Hey everyone!

We are really excited to share that we have just released the alpha version of Prodigy v1.12 (v1.12a1)!

This release is available for download for all v1.11.x license holders and includes:

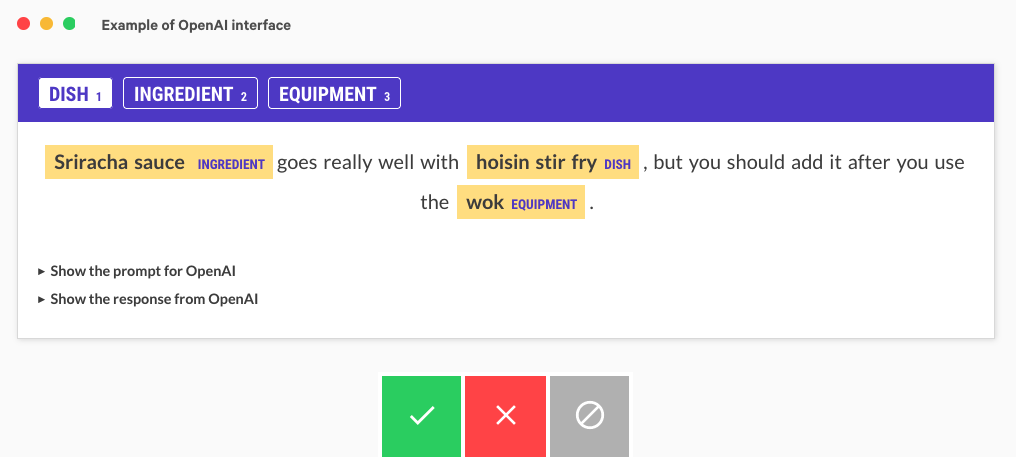

- New recipes for LLM-assisted annotations and prompt engineering: the LLM-assisted workflows we announced a while ago are now fully integrated with Prodigy and available out of the box. For `v1.12a1` you are still restricted to the OpenAI API to use them, but by `v1.12` we definitely want to leverage `spacy-llm` to enable more flexibility, notably using open source large language models. Please check out the alpha docs for the details and examples.

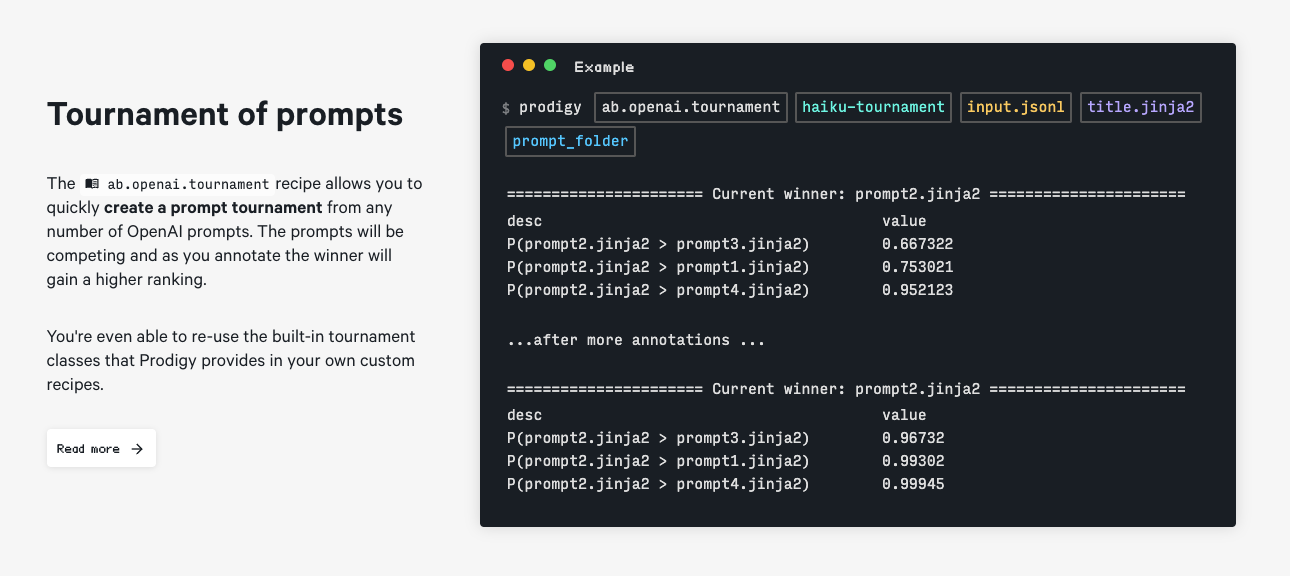

- A new, exciting workflow for prompt engineering that lets you compare more than two prompts in a tournament-like fashion. You can find details on the algorithm and the workflow itself here.

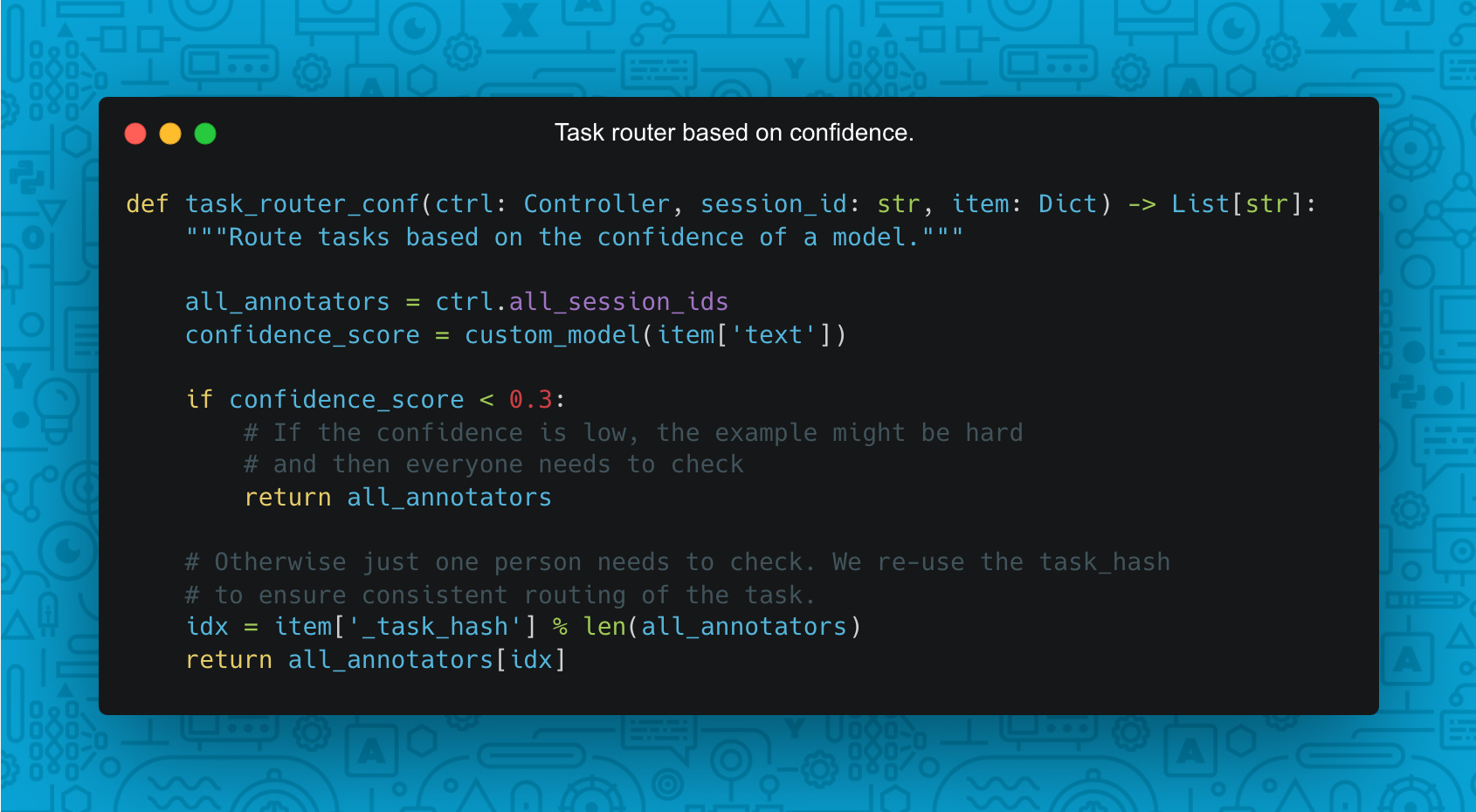

- Extended, fully customizable support for multi-annotator workflows. You can now customize what should happen when a new annotator joins an ongoing annotation project, how tasks should be allocated between existing annotators, and what should happen when one annotator finishes their assigned tasks before others. For common use-cases, you can use the options `feed_overlap`, `annotations_per_task` and `allow_work_stealing` (see the updated configuration documentation for details).
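As a rough illustration of the options above, here is a minimal sketch that builds a settings dictionary and prints it as JSON, which could then be pasted into your Prodigy configuration file. The helper name `build_annotator_config` and the values are made up for this example. `feed_overlap` is left out because which of the three options can be combined is best checked in the configuration documentation.

```python
import json


def build_annotator_config(annotations_per_task=2, allow_work_stealing=False):
    """Hypothetical helper: collect two of the options named in this post.

    The values are illustrative only, not recommended defaults.
    """
    return {
        # Assumed meaning: how many annotations to collect per task.
        "annotations_per_task": annotations_per_task,
        # Assumed meaning: whether an annotator who has finished their own
        # tasks may pick up tasks that were assigned to someone else.
        "allow_work_stealing": allow_work_stealing,
    }


config = build_annotator_config()
config_json = json.dumps(config, indent=2)
print(config_json)
```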

Custom recipes can specify `session_factory` and `task_router` callbacks for full control. For example, you can now route tasks based on model confidence.

The full guide on task routing can be found here.
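To give a feel for confidence-based routing, here is a minimal sketch of what a `task_router`-style callback might look like. It makes several assumptions: that the callback receives a controller-like object, the current session ID and the task, and returns a list of session IDs; that the controller exposes a `session_ids` attribute; and that the model score lives in `item["meta"]["score"]`. The function name and the `threshold` parameter are made up for the example, so please check the task routing guide for the actual interface.

```python
from types import SimpleNamespace
from typing import Dict, List


def route_by_confidence(ctrl, session_id: str, item: Dict,
                        threshold: float = 0.8) -> List[str]:
    """Send low-confidence tasks to everyone, confident ones to one annotator.

    `ctrl` only needs a `session_ids` attribute here (an assumption).
    """
    annotators = sorted(ctrl.session_ids)
    if not annotators:
        # No other sessions known yet: keep the task with the asking session.
        return [session_id]
    # Assumed location of the model score; missing scores count as 0.0.
    confidence = item.get("meta", {}).get("score", 0.0)
    if confidence < threshold:
        # The model is unsure, so collect an annotation from every annotator.
        return annotators
    # The model is confident: pick one annotator deterministically from the
    # task hash, so the same task always goes to the same person.
    index = item["_task_hash"] % len(annotators)
    return [annotators[index]]


# Stand-in for the controller, just for trying the function out.
fake_ctrl = SimpleNamespace(session_ids=["bob", "alice"])
unsure = {"text": "hard example", "_task_hash": 7, "meta": {"score": 0.3}}
confident = {"text": "easy example", "_task_hash": 7, "meta": {"score": 0.95}}
print(route_by_confidence(fake_ctrl, "alice", unsure))
print(route_by_confidence(fake_ctrl, "alice", confident))
```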

These changes required a significant reimplementation of the Controller class. We think we've tested it quite thoroughly, but if something seems wrong, please don't hesitate to report it.

- New `Stream` and `Source` classes. Previously, Prodigy recipes would return an opaque generator of tasks (the `stream`) in their components. This was convenient in some ways, but limiting in others. One notable limitation was that if you're reading source data from a file and then have some function that produces tasks from it, the information about that underlying data source would be lost, making it difficult to provide progress feedback. The new `Stream` class works as a generator, so it's fully compatible with existing recipes. But it's also a class, and it can be initialised with a `Source` object that tracks progress through the data being read, so that progress callbacks can easily ask how far along you are. With this refactor we have prepared the ground for better progress feedback, which will be available in our next alpha soon. We also took the opportunity to improve some of our data readers, and to add a new `Source` class for Parquet files. Please let us know if there are more `Source` classes you'd like to request.
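To make the idea concrete, here is a toy sketch of the pattern described above: a stream that still behaves like a generator, but keeps a reference to a source that knows how much of the data has been read. The classes `ToyJsonlSource` and `ToyStream` are invented for this illustration and are not Prodigy's actual `Source` and `Stream` implementations.

```python
import json


class ToyJsonlSource:
    """Toy source: yields rows from JSONL text and counts consumed lines."""

    def __init__(self, text):
        self._lines = text.splitlines()
        self._position = 0

    def __iter__(self):
        for line in self._lines:
            self._position += 1
            if line.strip():
                yield json.loads(line)

    def progress(self):
        """Fraction of lines read so far, between 0.0 and 1.0."""
        if not self._lines:
            return 1.0
        return self._position / len(self._lines)


class ToyStream:
    """Toy stream: iterates like a generator but remembers its source."""

    def __init__(self, source, make_task):
        self.source = source
        # Tasks are produced lazily, one source row at a time.
        self._tasks = (make_task(row) for row in source)

    def __iter__(self):
        return self

    def __next__(self):
        return next(self._tasks)

    def progress(self):
        # The stream can answer "how far along are we?" by asking the source.
        return self.source.progress()


data = "\n".join(json.dumps({"text": t}) for t in ["a", "b", "c", "d"])
stream = ToyStream(ToyJsonlSource(data), lambda row: {"text": row["text"].upper()})
progress_at_start = stream.progress()
first_task = next(stream)
progress_after_first = stream.progress()
remaining_tasks = list(stream)
progress_at_end = stream.progress()
```

Because `ToyStream` implements the iterator protocol, code that expects a plain generator (a `for` loop, `next()`, `list()`) keeps working, while anything that wants progress can call `progress()`.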

To install the latest alpha version run:

pip install --pre prodigy -f https://XXXX-XXXX-XXXX-XXXX@download.prodi.gy

Please note that this release supports macOS, Linux and Windows and can be installed on Python 3.8 and above.

All the links to the documentation come from our alpha docs, which are available here.

We would love for you to take the task router and the OpenAI recipes for a test drive! We look forward to any feedback you might have!