Perfect, I can increase my score by splitting the processing into two steps. Thanks!

However, I have a problem with spancat: my score stays at 0, even with 585 training docs and 194 evaluation docs. I tried both the "standard" model and "camembert-base".

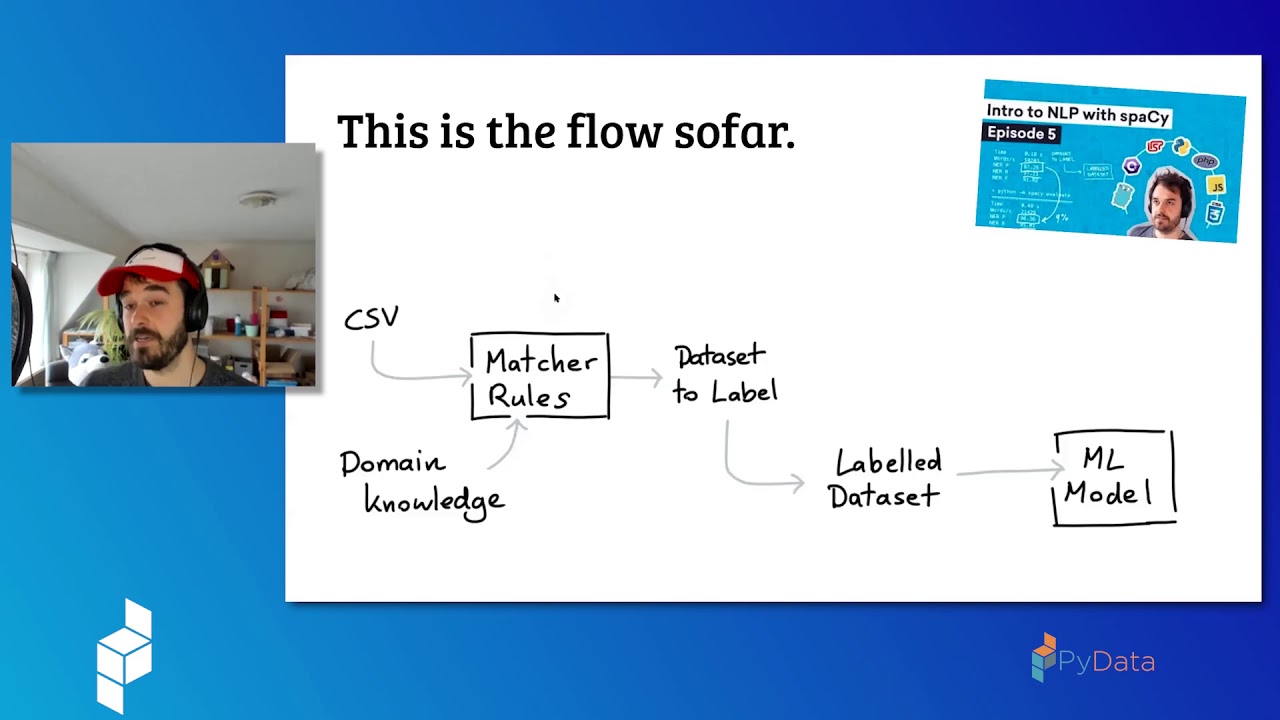

In the video tutorial, by contrast, the model already reaches a score of about 60% with only about 20 samples. When I reproduce the same steps with my data, the only difference being that the language is French rather than English, I still get 0.

Here are the two commands I tried when annotating:

python3 -m prodigy spans.manual train blank:fr data/train.jsonl --label "Intentions exprimées"

python3 -m prodigy spans.manual train fr_dep_news_trf data/train.jsonl --label "Intentions exprimées"

Here is what I get when I analyze the data with "debug-data":

============================ Data file validation ============================

proxies= None

token= None

f= vocab_file file_path= https://huggingface.co/camembert-base/resolve/main/sentencepiece.bpe.model

f= tokenizer_file file_path= https://huggingface.co/camembert-base/resolve/main/tokenizer.json

f= added_tokens_file file_path= https://huggingface.co/camembert-base/resolve/main/added_tokens.json

Cannot find the requested files in the cached path and outgoing traffic has been disabled. To enable model look-ups and downloads online, set 'local_files_only' to False.

f= special_tokens_map_file file_path= https://huggingface.co/camembert-base/resolve/main/special_tokens_map.json

Cannot find the requested files in the cached path and outgoing traffic has been disabled. To enable model look-ups and downloads online, set 'local_files_only' to False.

f= tokenizer_config_file file_path= https://huggingface.co/camembert-base/resolve/main/tokenizer_config.json

Cannot find the requested files in the cached path and outgoing traffic has been disabled. To enable model look-ups and downloads online, set 'local_files_only' to False.

unres= ['added_tokens_file', 'special_tokens_map_file', 'tokenizer_config_file']

files= {'vocab_file': '/home/cache/transformers/dbcb433aefd8b1a136d029fe2205a5c58a6336f8d3ba20e6c010f4d962174f5f.160b145acd37d2b3fd7c3694afcf4c805c2da5fd4ed4c9e4a23985e3c52ee452', 'tokenizer_file': '/home/cache/transformers/84c442cc6020fc04ce266072af54b040f770850f629dd86c5951dbc23ac4c0dd.8fd2f10f70e05e6bf043e8a6947f6cdf9bb5dc937df6f9210a5c0ba8ee48e959'}

Some weights of the model checkpoint at camembert-base were not used when initializing CamembertModel: ['lm_head.dense.weight', 'lm_head.dense.bias', 'lm_head.bias', 'lm_head.layer_norm.weight', 'lm_head.decoder.weight', 'lm_head.layer_norm.bias']

- This IS expected if you are initializing CamembertModel from the checkpoint of a model trained on another task or with another architecture (e.g. initializing a BertForSequenceClassification model from a BertForPreTraining model).

- This IS NOT expected if you are initializing CamembertModel from the checkpoint of a model that you expect to be exactly identical (initializing a BertForSequenceClassification model from a BertForSequenceClassification model).

✔ Pipeline can be initialized with data

✔ Corpus is loadable

=============================== Training stats ===============================

Language: fr

Training pipeline: transformer, spancat

585 training docs

194 evaluation docs

✔ No overlap between training and evaluation data

⚠ Low number of examples to train a new pipeline (585)

============================== Vocab & Vectors ==============================

ℹ 105167 total word(s) in the data (5067 unique)

⚠ 859 misaligned tokens in the training data

⚠ 292 misaligned tokens in the dev data

ℹ No word vectors present in the package

============================ Span Categorization ============================

Spans Key Labels

--------- ------------------------

sc {'Intentions exprimées'}

huggingface/tokenizers: The current process just got forked, after parallelism has already been used. Disabling parallelism to avoid deadlocks...

To disable this warning, you can either:

- Avoid using `tokenizers` before the fork if possible

- Explicitly set the environment variable TOKENIZERS_PARALLELISM=(true | false)

⚠ No examples for texts WITHOUT new label 'Intentions exprimées'

huggingface/tokenizers: The current process just got forked, after parallelism has already been used. Disabling parallelism to avoid deadlocks...

To disable this warning, you can either:

- Avoid using `tokenizers` before the fork if possible

- Explicitly set the environment variable TOKENIZERS_PARALLELISM=(true | false)

ℹ Span characteristics for spans_key 'sc'

ℹ SD = Span Distinctiveness, BD = Boundary Distinctiveness

Span Type Length SD BD

-------------------- ------ ---- ----

Intentions exprimées 9.84 0.66 0.87

-------------------- ------ ---- ----

Wgt. Average 9.84 0.66 0.87

ℹ Over 90% of spans have lengths of 1 -- 21 (min=1, max=57). The most

common span lengths are: 2 (1.47%), 3 (2.01%), 4 (4.28%), 5 (6.02%), 6 (7.62%),

7 (7.35%), 8 (7.09%), 9 (7.35%), 10 (7.62%), 11 (7.35%), 12 (5.61%), 13 (4.68%),

14 (4.68%), 15 (2.67%), 16 (2.67%), 17 (2.54%), 18 (3.21%), 19 (2.27%), 20

(1.87%), 21 (2.54%). If you are using the n-gram suggester, note that omitting

infrequent n-gram lengths can greatly improve speed and memory usage.

⚠ Spans may not be distinct from the rest of the corpus

⚠ Boundary tokens are not distinct from the rest of the corpus

✔ Good amount of examples for all labels

================================== Summary ==================================

✔ 4 checks passed

⚠ 6 warnings
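One check that may be relevant here, as a rough sketch rather than a confirmed diagnosis: the config further down sets `sizes = [1,2,3]` for the n-gram suggester, while the output above reports a mean span length of 9.84 tokens. Since spancat can only label spans that the suggester proposes, the snippet below adds up the reported percentages to estimate how many gold spans those sizes could reach. The `REPORTED_SHARE` dictionary and both function names are made up for this illustration; the numbers are copied from the output above, which does not list length 1 or lengths above 21.

```python
# Share (in %) of gold spans per token length, copied from the debug-data output.
# Length 1 and lengths above 21 are not listed there, so they are unknown here.
REPORTED_SHARE = {
    2: 1.47, 3: 2.01, 4: 4.28, 5: 6.02, 6: 7.62, 7: 7.35, 8: 7.09,
    9: 7.35, 10: 7.62, 11: 7.35, 12: 5.61, 13: 4.68, 14: 4.68,
    15: 2.67, 16: 2.67, 17: 2.54, 18: 3.21, 19: 2.27, 20: 1.87, 21: 2.54,
}


def listed_coverage(sizes, share=REPORTED_SHARE):
    """Sum the reported share (in %) of spans whose length is one of `sizes`."""
    return round(sum(share.get(n, 0.0) for n in set(sizes)), 2)


def unlisted_share(share=REPORTED_SHARE):
    """Share (in %) of spans whose length does not appear in the report."""
    return round(100.0 - sum(share.values()), 2)


if __name__ == "__main__":
    print("sizes [1,2,3] reach at least:", listed_coverage([1, 2, 3]), "%")
    print("sizes 1..21 reach at least:", listed_coverage(range(1, 22)), "%")
    print("not listed in the report:", unlisted_share(), "%")
```

With the listed figures, sizes 1 to 3 account for only a few percent of the annotated spans, so trying a wider range in `sizes` seems like a reasonable experiment, at the cost of more candidate spans per document.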

Here are my training results with camembert-base:

=========================== Initializing pipeline ===========================

proxies= None

token= None

f= vocab_file file_path= https://huggingface.co/camembert-base/resolve/main/sentencepiece.bpe.model

f= tokenizer_file file_path= https://huggingface.co/camembert-base/resolve/main/tokenizer.json

f= added_tokens_file file_path= https://huggingface.co/camembert-base/resolve/main/added_tokens.json

Cannot find the requested files in the cached path and outgoing traffic has been disabled. To enable model look-ups and downloads online, set 'local_files_only' to False.

f= special_tokens_map_file file_path= https://huggingface.co/camembert-base/resolve/main/special_tokens_map.json

Cannot find the requested files in the cached path and outgoing traffic has been disabled. To enable model look-ups and downloads online, set 'local_files_only' to False.

f= tokenizer_config_file file_path= https://huggingface.co/camembert-base/resolve/main/tokenizer_config.json

Cannot find the requested files in the cached path and outgoing traffic has been disabled. To enable model look-ups and downloads online, set 'local_files_only' to False.

unres= ['added_tokens_file', 'special_tokens_map_file', 'tokenizer_config_file']

files= {'vocab_file': '/home/cache/transformers/dbcb433aefd8b1a136d029fe2205a5c58a6336f8d3ba20e6c010f4d962174f5f.160b145acd37d2b3fd7c3694afcf4c805c2da5fd4ed4c9e4a23985e3c52ee452', 'tokenizer_file': '/home/cache/transformers/84c442cc6020fc04ce266072af54b040f770850f629dd86c5951dbc23ac4c0dd.8fd2f10f70e05e6bf043e8a6947f6cdf9bb5dc937df6f9210a5c0ba8ee48e959'}

✔ Initialized pipeline

============================= Training pipeline =============================

ℹ Pipeline: ['transformer', 'spancat']

ℹ Initial learn rate: 0.0

E # LOSS TRANS... LOSS SPANCAT SPANS_SC_F SPANS_SC_P SPANS_SC_R SCORE

--- ------ ------------- ------------ ---------- ---------- ---------- ------

0 0 1131.02 147.16 0.07 0.04 4.28 0.00

3 200 114324.40 16067.41 0.00 0.00 0.00 0.00

6 400 0.00 97.01 0.00 0.00 0.00 0.00

9 600 0.00 98.00 0.00 0.00 0.00 0.00

13 800 0.00 100.00 0.00 0.00 0.00 0.00

16 1000 0.01 106.01 0.00 0.00 0.00 0.00

19 1200 0.00 93.00 0.00 0.00 0.00 0.00

23 1400 0.00 106.00 0.00 0.00 0.00 0.00

26 1600 0.00 103.99 0.00 0.00 0.00 0.00

✔ Saved pipeline to output directory

And here is my config file:

[paths]

train = tmp/train_for_spacy/train.spacy

dev = tmp/train_for_spacy/dev.spacy

vectors = null

init_tok2vec = null

[system]

gpu_allocator = "pytorch"

seed = 0

[nlp]

lang = "fr"

pipeline = ["transformer","spancat"]

batch_size = 32

disabled = []

before_creation = null

after_creation = null

after_pipeline_creation = null

tokenizer = {"@tokenizers":"spacy.Tokenizer.v1"}

[components]

[components.spancat]

factory = "spancat"

max_positive = null

scorer = {"@scorers":"spacy.spancat_scorer.v1"}

spans_key = "sc"

threshold = 0.5

[components.spancat.model]

@architectures = "spacy.SpanCategorizer.v1"

[components.spancat.model.reducer]

@layers = "spacy.mean_max_reducer.v1"

hidden_size = 128

[components.spancat.model.scorer]

@layers = "spacy.LinearLogistic.v1"

nO = null

nI = null

[components.spancat.model.tok2vec]

@architectures = "spacy-transformers.TransformerListener.v1"

grad_factor = 1.0

pooling = {"@layers":"reduce_mean.v1"}

upstream = "*"

[components.spancat.suggester]

@misc = "spacy.ngram_suggester.v1"

sizes = [1,2,3]

[components.transformer]

factory = "transformer"

max_batch_items = 4096

set_extra_annotations = {"@annotation_setters":"spacy-transformers.null_annotation_setter.v1"}

[components.transformer.model]

@architectures = "spacy-transformers.TransformerModel.v3"

name = "camembert-base"

mixed_precision = false

[components.transformer.model.get_spans]

@span_getters = "spacy-transformers.strided_spans.v1"

window = 128

stride = 96

[components.transformer.model.grad_scaler_config]

[components.transformer.model.tokenizer_config]

use_fast = true

[components.transformer.model.transformer_config]

[corpora]

[corpora.dev]

@readers = "spacy.Corpus.v1"

path = ${paths.dev}

max_length = 0

gold_preproc = false

limit = 0

augmenter = null

[corpora.train]

@readers = "spacy.Corpus.v1"

path = ${paths.train}

max_length = 0

gold_preproc = false

limit = 0

augmenter = null

[training]

accumulate_gradient = 3

dev_corpus = "corpora.dev"

train_corpus = "corpora.train"

seed = ${system.seed}

gpu_allocator = ${system.gpu_allocator}

dropout = 0.1

patience = 1600

max_epochs = 0

max_steps = 20000

eval_frequency = 200

frozen_components = []

annotating_components = []

before_to_disk = null

[training.batcher]

@batchers = "spacy.batch_by_padded.v1"

discard_oversize = true

size = 2000

buffer = 256

get_length = null

[training.logger]

@loggers = "spacy.ConsoleLogger.v1"

progress_bar = false

[training.optimizer]

@optimizers = "Adam.v1"

beta1 = 0.9

beta2 = 0.999

L2_is_weight_decay = true

L2 = 0.01

grad_clip = 1.0

use_averages = false

eps = 0.00000001

[training.optimizer.learn_rate]

@schedules = "warmup_linear.v1"

warmup_steps = 250

total_steps = 20000

initial_rate = 0.00005

[training.score_weights]

spans_sc_f = 1.0

spans_sc_p = 0.0

spans_sc_r = 0.0

[pretraining]

[initialize]

vectors = ${paths.vectors}

init_tok2vec = ${paths.init_tok2vec}

vocab_data = null

lookups = null

before_init = null

after_init = null

[initialize.components]

[initialize.tokenizer]
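The `Initial learn rate: 0.0` line in the training log looks alarming at first, but it seems consistent with the `warmup_linear.v1` schedule in the config. Below is a minimal sketch of how I understand that schedule to behave, using the values from `[training.optimizer.learn_rate]`. The function name `warmup_linear_rate` is hypothetical and this is not the library's own code.

```python
def warmup_linear_rate(step, initial_rate=0.00005, warmup_steps=250, total_steps=20000):
    """Approximate learning rate at a given optimizer step (assumed behaviour)."""
    if step < warmup_steps:
        # Linear ramp from 0 up to initial_rate during warmup.
        factor = step / max(1, warmup_steps)
    else:
        # Linear decay from initial_rate down to 0 at total_steps.
        factor = max(0.0, (total_steps - step) / max(1, total_steps - warmup_steps))
    return factor * initial_rate


if __name__ == "__main__":
    for step in (0, 125, 250, 10000, 20000):
        print(step, warmup_linear_rate(step))
```

If this reading is right, a rate of 0.0 at step 0 is expected and is probably not what keeps the score at 0.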

I confess I don't understand where my problem comes from, because my case is quite close to the tutorial. It is even simpler, since I have only one label.

Moreover, the spans should be easy to identify, because they often start with:

"Je souhaiterais ..."

"J'aimerais ...."

"Je voudrais ..."

I don't understand why the model can't capture that.
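To make the point about these openers concrete, here is a small rule-based sketch that finds where they occur in a text. It is only a baseline for comparison, not something spancat does internally. The names `OPENERS`, `OPENER_RE` and `find_opener_offsets` are made up for the illustration, and the example sentence is invented, not taken from my dataset.

```python
import re

# Openers I see most often at the start of my annotated spans.
OPENERS = ["Je souhaiterais", "J'aimerais", "Je voudrais"]
OPENER_RE = re.compile("|".join(re.escape(o) for o in OPENERS), flags=re.IGNORECASE)


def find_opener_offsets(text):
    """Return (start_char, matched_text) for each opener found in `text`."""
    return [(m.start(), m.group(0)) for m in OPENER_RE.finditer(text)]


if __name__ == "__main__":
    # Invented sentence for illustration only.
    example = "Bonjour, je voudrais changer mon adresse. J'aimerais aussi un devis."
    print(find_opener_offsets(example))
```

This only locates where a span might start; the end of each span would still have to come from the annotations or from a trained model.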

I can send a sample of the data if it helps (just tell me where). Right now, I admit I'm pretty desperate.