Sharing this approach in response to @mv3's question in a previous post:

My NLP project leverages a structured, three-stage Named Entity Recognition (NER) approach, using Prodigy for annotating job postings.

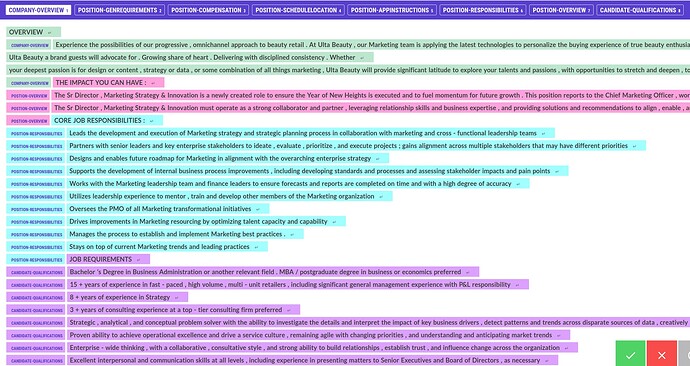

The first stage involves training an NER model to categorize broad sections of the job postings. This is achieved by manually annotating large spans of text—such as entire sentences or paragraphs—within Prodigy, using high-level labels that capture the general content of these sections. For instance, identifying distinct segments like 'Requirements', 'Responsibilities', or 'Qualifications'. This sets the stage for a more detailed analysis.

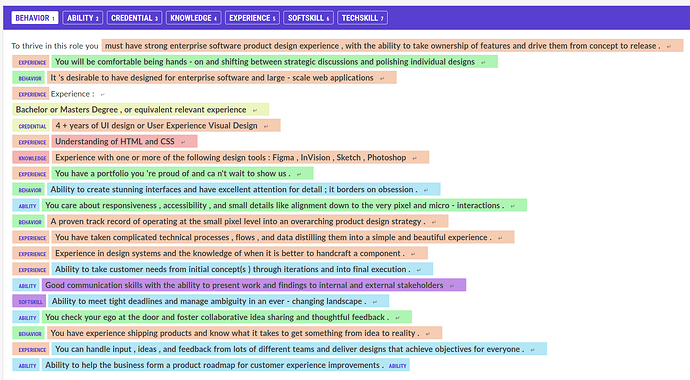

The second stage involves applying intermediate-level labels to the text. These labels are 'Behavior', 'Ability', 'Credential', 'Knowledge', 'Experience', 'Softskill', and 'Techskill'. At this level, I'm still working within a relatively broad scope but starting to zoom in on the specifics of the job description. This is where the model learns to distinguish between the types of qualifications and traits sought by employers.

The third and final stage is where the model gets even more specific. Here, I apply granular labels such as 'hardware', 'software', 'degree', 'certification', and 'years of experience'. These labels delve into the particulars within the previously identified intermediate sections. By training the model to understand and identify these detailed entities, I can extract very specific information from the job postings.

This multi-tiered approach allows the NER model to process complex job postings in a layered fashion, improving its accuracy and utility. The initial broad classification helps in managing the complexity by segmenting the data, and the subsequent, more detailed labeling captures the fine-grained information necessary for a comprehensive analysis.

Stage 1

Stage 2 (Now within Candidate-Qualifications)

Stage 3

Currently in development