Hello,

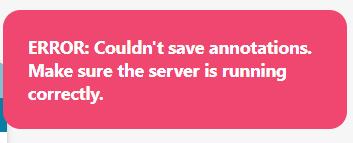

I’m running my first `textcat.teach` recipe. When progress reached 50% (edit: I later found that this happens at any percentage, unpredictably), I got the following chain of errors and was also unable to save from the UI:

```
19:12:16 - Exception when serving /give_answers
Traceback (most recent call last):
  File "C:\Users\User\Anaconda3\lib\site-packages\waitress\channel.py", line 338, in service
    task.service()
  File "C:\Users\User\Anaconda3\lib\site-packages\waitress\task.py", line 169, in service
    self.execute()
  File "C:\Users\User\Anaconda3\lib\site-packages\waitress\task.py", line 399, in execute
    app_iter = self.channel.server.application(env, start_response)
  File "hug\api.py", line 424, in hug.api.ModuleSingleton.__call__.api_auto_instantiate
  File "C:\Users\User\Anaconda3\lib\site-packages\falcon\api.py", line 244, in __call__
    responder(req, resp, **params)
  File "hug\interface.py", line 734, in hug.interface.HTTP.__call__
  File "hug\interface.py", line 709, in hug.interface.HTTP.__call__
  File "hug\interface.py", line 649, in hug.interface.HTTP.call_function
  File "hug\interface.py", line 100, in hug.interface.Interfaces.__call__
  File "C:\Users\User\Anaconda3\lib\site-packages\prodigy\app.py", line 101, in give_answers
    controller.receive_answers(answers)
  File "cython_src\prodigy\core.pyx", line 98, in prodigy.core.Controller.receive_answers
  File "cython_src\prodigy\util.pyx", line 277, in prodigy.util.combine_models.update
  File "cython_src\prodigy\models\textcat.pyx", line 169, in prodigy.models.textcat.TextClassifier.update
KeyError: 'label'
```
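The last frame is `TextClassifier.update`, and `KeyError: 'label'` suggests it looked up a `"label"` key in an answer dict that didn't have one. To narrow it down, here is a minimal sketch I could run over a JSONL file of tasks (assuming one JSON object per line, e.g. an export of the annotations saved so far). The helper name `partition_by_label` is my own, not part of Prodigy:

```python
import json
from typing import Dict, Iterable, List, Tuple


def partition_by_label(lines: Iterable[str]) -> Tuple[List[Dict], List[Tuple[int, Dict]]]:
    """Split JSONL lines into tasks that have a "label" key and
    (line_number, task) pairs for tasks that don't.

    Hypothetical diagnostic helper: it only inspects the dicts and
    does not call any Prodigy API.
    """
    labelled: List[Dict] = []
    unlabelled: List[Tuple[int, Dict]] = []
    for line_no, line in enumerate(lines, start=1):
        line = line.strip()
        if not line:
            continue  # ignore blank lines
        task = json.loads(line)
        if "label" in task:
            labelled.append(task)
        else:
            # Remember the 1-based line number so the record is easy to find.
            unlabelled.append((line_no, task))
    return labelled, unlabelled


if __name__ == "__main__":
    sample = [
        '{"text": "a", "label": "X"}',
        "",
        '{"text": "b", "answer": "accept"}',
    ]
    ok, missing = partition_by_label(sample)
    print(len(ok), missing)  # 1 [(3, {'text': 'b', 'answer': 'accept'})]
```

To run it on a real file, pass an open file handle, e.g. `partition_by_label(open("annotations.jsonl", encoding="utf8"))` (the file name is a placeholder).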

Any ideas?

Thanks