Thanks for the pointers in your earlier reply! I’ve tried to implement a pages + blocks workflow using a toy example. I also used ChatGPT to help me draft the recipe.

Data (dataset/catalog.jsonl):

{"catalog_id":"CAT001","curator":"ops-team","catalog_items":[{"seq":1,"sku":"A-100","title":"Acme SuperWidget 3000","original_text":"super widget v3k","suggested_text":"Acme SuperWidget 3000"},{"seq":2,"sku":"B-200","title":"BoltMaster Kit (42pc)","original_text":"bolts kit 42 pieces","suggested_text":"BoltMaster Kit, 42-Piece"}]}
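To double-check the record shape before handing the file to Prodigy, here is a small stdlib-only sketch. The helper name `validate_catalog_line` and the set of required keys are my own assumptions based on the toy record above, not anything Prodigy provides:

```python
import json

# Keys every catalog item is expected to carry (assumption based on the toy record).
REQUIRED_ITEM_KEYS = {"seq", "sku", "title", "original_text", "suggested_text"}


def validate_catalog_line(line: str) -> int:
    """Parse one JSONL line and return the number of catalog items.

    Raises ValueError if an item is missing one of the expected keys.
    """
    record = json.loads(line)
    items = record.get("catalog_items") or []
    for item in items:
        missing = REQUIRED_ITEM_KEYS - item.keys()
        if missing:
            raise ValueError(f"item {item.get('seq')} is missing {sorted(missing)}")
    return len(items)


# Shortened copy of the record above, with a single item.
sample = (
    '{"catalog_id":"CAT001","curator":"ops-team","catalog_items":['
    '{"seq":1,"sku":"A-100","title":"Acme SuperWidget 3000",'
    '"original_text":"super widget v3k","suggested_text":"Acme SuperWidget 3000"}]}'
)
num_items = validate_catalog_line(sample)
```

Each item counted here becomes one page in the recipe below.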

Recipe (recipe.py):

from typing import Iterable, Dict, List

import prodigy
from prodigy.components.loaders import JSONL
from prodigy.util import set_hashes

def _html_catalog_table(items: List[Dict], highlight_seq: str = None) -> str:
    """Context table with optional highlight for the current row."""
    rows = []
    for it in items:
        seq = it.get("seq", "")
        sku = it.get("sku", "")
        title = it.get("title", "")
        orig = it.get("original_text", "")
        sug = it.get("suggested_text", "")
        # Compare as strings so an int seq still matches a str highlight_seq.
        highlight = (str(seq) == str(highlight_seq)) if highlight_seq is not None else False
        row_style = "background-color:#fff7cc;" if highlight else ""  # pale yellow
        rows.append(
            f"<tr style='{row_style}'>"
            f"<td style='padding:6px 8px; border-bottom:1px solid #eee'>{seq}</td>"
            f"<td style='padding:6px 8px; border-bottom:1px solid #eee'>{sku}</td>"
            f"<td style='padding:6px 8px; border-bottom:1px solid #eee'>{title}</td>"
            f"<td style='padding:6px 8px; border-bottom:1px solid #eee'>{orig}</td>"
            f"<td style='padding:6px 8px; border-bottom:1px solid #eee'>{sug}</td>"
            f"</tr>"
        )
    header = (
        "<table style='width:100%; border-collapse:collapse; font-size:14px'>"
        "<thead>"
        "<tr style='text-align:left'>"
        "<th style='padding:6px 8px; border-bottom:2px solid #ccc; width:60px'>Seq</th>"
        "<th style='padding:6px 8px; border-bottom:2px solid #ccc; width:120px'>SKU</th>"
        "<th style='padding:6px 8px; border-bottom:2px solid #ccc'>Title</th>"
        "<th style='padding:6px 8px; border-bottom:2px solid #ccc'>Original</th>"
        "<th style='padding:6px 8px; border-bottom:2px solid #ccc'>Suggested</th>"
        "</tr>"
        "</thead><tbody>"
    )
    return header + "".join(rows) + "</tbody></table>"

def _iter_tasks(source: str) -> Iterable[Dict]:
    """
    One parent task per catalog (pages). Each page = one item:
    - Show full table as context with current row highlighted
    - Editable text field with the current suggestion pre-filled
    """
    for cat in JSONL(source):
        items = cat.get("catalog_items") or []
        if not isinstance(items, list) or not items:
            continue
        catalog_id = cat.get("catalog_id", "")
        curator = cat.get("curator", "")
        pages: List[Dict] = []
        for it in items:
            seq = it.get("seq", "")
            sku = it.get("sku", "")
            title = it.get("title", "")
            orig = it.get("original_text", "") or ""
            sug = it.get("suggested_text", "") or ""
            context_table_html = _html_catalog_table(items, highlight_seq=seq)
            page_html = (
                "<div style='text-align:left; width:100%'>"
                f"<div style='margin:0 0 8px 0; color:#666'>"
                f"<strong>Catalog:</strong> {catalog_id}"
                f" | "
                f"<strong>Curator:</strong> {curator}"
                f"</div>"
                f"{context_table_html}"
                "<hr style='margin:12px 0; border:none; border-top:1px solid #ddd'/>"
                f"<div style='padding:6px 0; color:#666'><strong>Item Number:</strong> {seq}</div>"
                f"<div style='padding:6px 0'><strong>SKU:</strong> {sku}</div>"
                f"<div style='padding:6px 0'><strong>Title:</strong> {title}</div>"
                f"<div style='padding:6px 0'><strong>Original Text:</strong> {orig}</div>"
                "</div>"
            )
            page = {
                "view_id": "blocks",
                "config": {
                    "blocks": [
                        {"view_id": "html", "html": page_html},
                        {
                            "view_id": "text_input",
                            "field_id": "edited_text",
                            "field_label": "Normalized Text",
                            # text_input settings use the field_ prefix
                            "field_placeholder": "Enter the normalized/catalog-ready text…",
                            "field_rows": 2,
                        },
                    ]
                },
                "edited_text": sug,  # prefill
                "text": sug or f"{catalog_id} item {seq}",  # ensure hashable
                # store meta-like data under a non-special key to avoid footer chips
                "task_info": {
                    "catalog_id": catalog_id,
                    "seq": seq,
                    "sku": sku,
                    "title": title,
                    "original_text": orig,
                    "suggested_text": sug,
                },
            }
            page = set_hashes(page, input_keys=("text",))
            pages.append(page)
        if not pages:
            continue
        parent = {
            "view_id": "pages",
            "pages": pages,
            "text": f"Catalog {catalog_id}",
            "task_info": {
                "catalog_id": catalog_id,
                "curator": curator,
                "num_items": len(pages),
            },
        }
        parent = set_hashes(parent, input_keys=("text",))
        yield parent

@prodigy.recipe(
    "catalog.normalize_review",
    dataset=("Dataset to save annotations", "positional", None, str),
    source=("Path to JSONL catalog file", "positional", None, str),
)
def catalog_normalize_review(dataset: str, source: str):
    """
    Multi-annotator, no-overlap review/edit workflow:
    - One catalog per task (pages), one page per item.
    - Each catalog routed to a single session (no overlap).
    - Record session user as `reviewer`.
    """
    stream = _iter_tasks(source)

    def before_db(examples):
        for eg in examples:
            # Take the session name from whichever key is present on the example.
            session = (
                eg.get("session_id")
                or eg.get("_session_id")
                or eg.get("session")
                or ""
            )
            for p in eg.get("pages") or []:
                suggested = (p.get("task_info", {}) or {}).get("suggested_text", "")
                edited_val = p.get("edited_text", "")
                # Fall back to the suggestion if the field was left empty.
                has_edit = isinstance(edited_val, str) and edited_val.strip() != ""
                final = edited_val if has_edit else suggested
                p["final_text"] = final
                p["edited"] = (final != suggested)
                p["reviewer"] = session
        return examples

    return {
        "dataset": dataset,
        "stream": stream,
        "view_id": "pages",
        "before_db": before_db,
        "config": {
            "lang": "en",
            "auto_count_stream": True,
            "buttons": ["accept", "reject", "ignore"],
            # route whole catalogs to a single annotator
            "feed_overlap": False,
            "exclude_by": "input",
            # hide meta chips just in case
            "show_meta": False,
            # No custom keymap here, to keep the MRE minimal:
            # the focus bug persists even without keybindings.
        },
    }
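
In case it helps with reproducing, the per-page logic in `before_db` can be exercised without Prodigy. This is only a minimal sketch: `resolve_page` is a hypothetical helper name, `"demo-session"` is a made-up session name, and I'm assuming the answered page carries `edited_text` and `task_info` exactly as built in the recipe:

```python
from typing import Dict


def resolve_page(page: Dict, reviewer: str = "") -> Dict:
    """Hypothetical helper mirroring the per-page logic in before_db."""
    suggested = (page.get("task_info") or {}).get("suggested_text", "")
    edited_val = page.get("edited_text", "")
    # An empty or whitespace-only field falls back to the suggestion.
    has_edit = isinstance(edited_val, str) and edited_val.strip() != ""
    final = edited_val if has_edit else suggested
    return {
        **page,
        "final_text": final,
        "edited": final != suggested,
        "reviewer": reviewer,
    }


# A page where the reviewer changed the suggestion.
page = {
    "edited_text": "BoltMaster Kit (42 pc)",
    "task_info": {"suggested_text": "BoltMaster Kit, 42-Piece"},
}
resolved = resolve_page(page, reviewer="demo-session")
print(resolved["final_text"], resolved["edited"])
```

A page whose field was cleared or left as whitespace keeps the suggestion as `final_text` and is stored with `edited` set to `False`.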

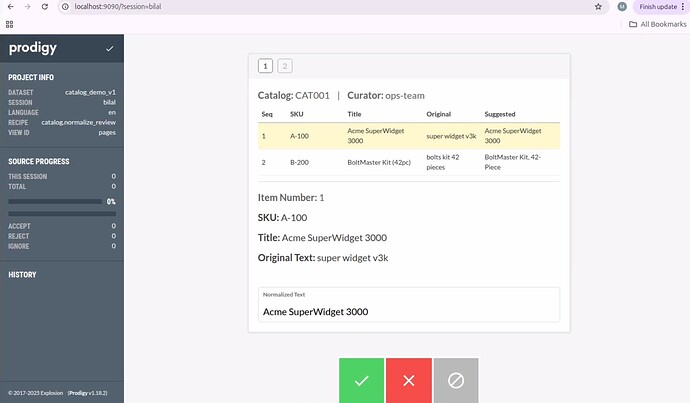

I’m getting the desired UI (screenshot below). The Normalized Text field is meant for the reviewer to edit whenever they feel the suggested description could be improved:

Problem: In the text_input block, the field loses focus after a single keystroke, so the annotator has to click back into it to keep typing.

Questions:

- Is there a workaround to keep focus so annotators can type normally?

- Also, am I doing anything here that isn’t the Prodigy way, and are there improvements you’d recommend for this workflow design?

Thanks again,

Bilal