Hi,

Following the ‘First steps’, I created the model, created a spaCy package from it, then installed the package and linked it to a custom name.

However, when I try to load it with spacy.load(), or use it as the base model for ner.make-gold, I receive the following error message (the model name in it depends on the language model used for training):

OSError: [E050] Can’t find model ‘en_core_web_lg.vectors’. It doesn’t seem to be a shortcut link, a Python package or a valid path to a data directory.

However, if I load the language model (in this case, ‘en_core_web_lg’) before loading my custom model, everything works fine.

I am wondering if there is a way to create the spaCy package so that the model can be used without loading the language model first.

Hi! Could you share the meta.json of your custom model package? And which version of spaCy are you on? (You can find out by running the python -m spacy info command.)

Hi Ines,

You were right: it was caused by a wrong meta.json. The meta.json generated by spacy package ... --create-meta had no vectors name: "vectors":{ "width":XYZ, "vectors":XYZ, "keys":XYZ}. I compared it with the meta.json automatically generated by prodigy textcat.batch-train ... en_core_web_md, where the vector info is stored in the following format: "vectors":{... , "name":"en_core_web_md.vectors"}

I just copied the automatically generated meta.json, changed the model name to my own unique name, and was then able to load my custom model with vectors inside Prodigy commands.

spaCy version: 2.0.16. It looks like this is a problem with the spacy package --create-meta command.

Prodigy version: 1.6.1
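The meta.json fix described above can be scripted. This is a minimal sketch, assuming meta.json sits in the model data directory and that only the "name" key inside "vectors" is missing; set_vectors_name is a hypothetical helper, not part of spaCy or Prodigy. It only makes sense when every component really was trained with the vectors you name; otherwise it just hides a mismatch.

```python
import json
from pathlib import Path


def set_vectors_name(meta_path, vectors_name):
    """Add or overwrite the "name" entry of the "vectors" block in a meta.json.

    Hypothetical helper: it only edits the JSON file and does not check that
    a vectors table with this name actually exists anywhere.
    """
    meta_path = Path(meta_path)
    meta = json.loads(meta_path.read_text(encoding="utf8"))
    vectors = meta.setdefault("vectors", {})  # keep width/vectors/keys as-is
    vectors["name"] = vectors_name
    meta_path.write_text(json.dumps(meta, indent=2), encoding="utf8")
    return meta


# Example (placeholder path, adjust to your own model data directory):
# set_vectors_name("/path/to/my_model/meta.json", "en_core_web_md.vectors")
```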

Thanks for following up! I think this points to an issue in spaCy, especially in the package command. We’ll get this fixed.

@ines, @honnibal Hi, I have the same issue. I was able to resolve the problem by updating the vectors name in the meta.json file, but surprisingly, once I shut down my Jupyter notebook and open a new one, it gives me an error asking for a different vectors name:

OSError: [E050] Can't find model 'en_model.vectors'. It doesn't seem to be a shortcut link, a Python package or a valid path to a data directory.

OSError: [E050] Can't find model 'en_vectors_web_lg.vectors'. It doesn't seem to be a shortcut link, a Python package or a valid path to a data directory.

I'm good to go if I update the metadata with that name, but once I open a new notebook, it asks for the other name.

I have NER and textcat models in my pipeline, each trained with a different base model and different word2vec vectors.

    ---------------------------------------------------------------------------
    OSError                                   Traceback (most recent call last)
    <ipython-input-1-0694cd8e96e5> in <module>
          7 warnings.filterwarnings('ignore')
          8
    ----> 9 nlp = spacy.load('en_nlp_model') #en_nlp_model
         10
         11

    /opt/anaconda3/envs/spacy/lib/python3.7/site-packages/spacy/__init__.py in load(name, **overrides)
         28 if depr_path not in (True, False, None):
         29 deprecation_warning(Warnings.W001.format(path=depr_path))
    ---> 30 return util.load_model(name, **overrides)
         31
         32

    /opt/anaconda3/envs/spacy/lib/python3.7/site-packages/spacy/util.py in load_model(name, **overrides)
        162 return load_model_from_link(name, **overrides)
        163 if is_package(name): # installed as package
    --> 164 return load_model_from_package(name, **overrides)
        165 if Path(name).exists(): # path to model data directory
        166 return load_model_from_path(Path(name), **overrides)

    /opt/anaconda3/envs/spacy/lib/python3.7/site-packages/spacy/util.py in load_model_from_package(name, **overrides)
        183 """Load a model from an installed package."""
        184 cls = importlib.import_module(name)
    --> 185 return cls.load(**overrides)
        186
        187

    /opt/anaconda3/envs/spacy/lib/python3.7/site-packages/en_nlp_model/__init__.py in load(**overrides)
         10
         11 def load(**overrides):
    ---> 12 return load_model_from_init_py(__file__, **overrides)

    /opt/anaconda3/envs/spacy/lib/python3.7/site-packages/spacy/util.py in load_model_from_init_py(init_file, **overrides)
        226 if not model_path.exists():
        227 raise IOError(Errors.E052.format(path=path2str(data_path)))
    --> 228 return load_model_from_path(data_path, meta, **overrides)
        229
        230

    /opt/anaconda3/envs/spacy/lib/python3.7/site-packages/spacy/util.py in load_model_from_path(model_path, meta, **overrides)
        209 component = nlp.create_pipe(factory, config=config)
        210 nlp.add_pipe(component, name=name)
    --> 211 return nlp.from_disk(model_path, exclude=disable)
        212
        213

    /opt/anaconda3/envs/spacy/lib/python3.7/site-packages/spacy/language.py in from_disk(self, path, exclude, disable)
        945 # Convert to list here in case exclude is (default) tuple
        946 exclude = list(exclude) + ["vocab"]
    --> 947 util.from_disk(path, deserializers, exclude)
        948 self._path = path
        949 return self

    /opt/anaconda3/envs/spacy/lib/python3.7/site-packages/spacy/util.py in from_disk(path, readers, exclude)
        652 # Split to support file names like meta.json
        653 if key.split(".")[0] not in exclude:
    --> 654 reader(path / key)
        655 return path
        656

    /opt/anaconda3/envs/spacy/lib/python3.7/site-packages/spacy/language.py in <lambda>(p, proc)
        940 continue
        941 deserializers[name] = lambda p, proc=proc: proc.from_disk(
    --> 942 p, exclude=["vocab"]
        943 )
        944 if not (path / "vocab").exists() and "vocab" not in exclude:

    pipes.pyx in spacy.pipeline.pipes.Pipe.from_disk()

    /opt/anaconda3/envs/spacy/lib/python3.7/site-packages/spacy/util.py in from_disk(path, readers, exclude)
        652 # Split to support file names like meta.json
        653 if key.split(".")[0] not in exclude:
    --> 654 reader(path / key)
        655 return path
        656

    pipes.pyx in spacy.pipeline.pipes.Pipe.from_disk.load_model()

    pipes.pyx in spacy.pipeline.pipes.TextCategorizer.Model()

    /opt/anaconda3/envs/spacy/lib/python3.7/site-packages/spacy/_ml.py in Tok2Vec(width, embed_size, **kwargs)
        320 if not USE_MODEL_REGISTRY_TOK2VEC:
        321 # Preserve prior tok2vec for backwards compat, in v2.2.2
    --> 322 return _legacy_tok2vec.Tok2Vec(width, embed_size, **kwargs)
        323 pretrained_vectors = kwargs.get("pretrained_vectors", None)
        324 cnn_maxout_pieces = kwargs.get("cnn_maxout_pieces", 3)

    /opt/anaconda3/envs/spacy/lib/python3.7/site-packages/spacy/ml/_legacy_tok2vec.py in Tok2Vec(width, embed_size, **kwargs)
         42 prefix, suffix, shape = (None, None, None)
         43 if pretrained_vectors is not None:
    ---> 44 glove = StaticVectors(pretrained_vectors, width, column=cols.index(ID))
         45
         46 if subword_features:

    /opt/anaconda3/envs/spacy/lib/python3.7/site-packages/thinc/neural/_classes/static_vectors.py in __init__(self, lang, nO, drop_factor, column)
         41 # same copy of spaCy if they're deserialised.
         42 self.lang = lang
    ---> 43 vectors = self.get_vectors()
         44 self.nM = vectors.shape[1]
         45 self.drop_factor = drop_factor

    /opt/anaconda3/envs/spacy/lib/python3.7/site-packages/thinc/neural/_classes/static_vectors.py in get_vectors(self)
         53
         54 def get_vectors(self):
    ---> 55 return get_vectors(self.ops, self.lang)
         56
         57 def begin_update(self, ids, drop=0.0):

    /opt/anaconda3/envs/spacy/lib/python3.7/site-packages/thinc/extra/load_nlp.py in get_vectors(ops, lang)
         24 key = (ops.device, lang)
         25 if key not in VECTORS:
    ---> 26 nlp = get_spacy(lang)
         27 VECTORS[key] = nlp.vocab.vectors.data
         28 return VECTORS[key]

    /opt/anaconda3/envs/spacy/lib/python3.7/site-packages/thinc/extra/load_nlp.py in get_spacy(lang, **kwargs)
         12
         13 if lang not in SPACY_MODELS:
    ---> 14 SPACY_MODELS[lang] = spacy.load(lang, **kwargs)
         15 return SPACY_MODELS[lang]
         16

    /opt/anaconda3/envs/spacy/lib/python3.7/site-packages/spacy/__init__.py in load(name, **overrides)
         28 if depr_path not in (True, False, None):
         29 deprecation_warning(Warnings.W001.format(path=depr_path))
    ---> 30 return util.load_model(name, **overrides)
         31
         32

    /opt/anaconda3/envs/spacy/lib/python3.7/site-packages/spacy/util.py in load_model(name, **overrides)
        167 elif hasattr(name, "exists"): # Path or Path-like to model data
        168 return load_model_from_path(name, **overrides)
    --> 169 raise IOError(Errors.E050.format(name=name))
        170
        171

    OSError: [E050] Can't find model 'en_vectors_web_lg.vectors'. It doesn't seem to be a shortcut link, a Python package or a valid path to a data directory.

spaCy version 2.2.4

Location /opt/anaconda3/envs/spacy/lib/python3.7/site-packages/spacy

Platform Darwin-19.3.0-x86_64-i386-64bit

Python version 3.7.4

By "in your pipeline", do you mean within the same nlp object? That's currently not possible: if components were trained with different base models and different vectors, they can't be part of the same nlp pipeline, because there can only be one set of vectors that provides the features.

@ines Yes, the same nlp.

I can create a custom pipeline by adding all those NER and Textcat models and run the model with no problem. When I create a Python package and load it again, I get those error messages.

I can fix it by changing the meta data, but once I open a new Jupyter notebook, it asks for another vectors name.

It's definitely confusing, but I think you ended up in a weird state here where the vectors in the meta.json don't match the vectors in the individual component cfg (maybe have a look at that in the model data directories as well) – which makes sense, because they did indeed use different vectors.

So even if you do manage to trick spaCy into thinking it's all the same vectors, you might still see different results for the components trained with different vectors. Maybe it's easiest to start fresh and save out the pipelines separately, or retrain so that all components use the same base model.
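One way to check whether a package is in the state described above is to compare the vectors name in meta.json with the pretrained_vectors value stored in each component's cfg (the traceback shows spaCy reading a "pretrained_vectors" setting when building the textcat model). A minimal sketch, assuming the spaCy v2 on-disk layout: meta.json with a "pipeline" list, and a JSON file called cfg in each component's subdirectory. find_vectors_mismatches is a hypothetical helper, not part of spaCy.

```python
import json
from pathlib import Path


def find_vectors_mismatches(model_dir):
    """Compare meta.json's vectors name with each component's cfg.

    Assumes a spaCy v2-style model data directory: meta.json at the top level
    (with a "pipeline" list) and one subdirectory per component holding a JSON
    file named "cfg" that may contain a "pretrained_vectors" entry.
    Returns (name_in_meta, {component: name_in_cfg}) for every disagreement.
    """
    model_dir = Path(model_dir)
    meta = json.loads((model_dir / "meta.json").read_text(encoding="utf8"))
    expected = meta.get("vectors", {}).get("name")
    mismatches = {}
    for component in meta.get("pipeline", []):
        cfg_path = model_dir / component / "cfg"
        if not cfg_path.exists():
            continue  # component without a cfg file: nothing to compare
        cfg = json.loads(cfg_path.read_text(encoding="utf8"))
        used = cfg.get("pretrained_vectors")
        if used is not None and used != expected:
            mismatches[component] = used
    return expected, mismatches
```

Run it on the model data directory (the folder that contains meta.json). A non-empty dict means at least one component expects different vectors than meta.json declares, so editing meta.json alone cannot make the pipeline consistent.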

Hi Ines

I've run spacy train on the command line for NER, with the base model loaded as nlp = spacy.load("en_core_web_lg"), but it gives me this error: OSError: [E050] Can't find model 'nlp'. It doesn't seem to be a shortcut link, a Python package or a valid path to a data directory. This happens even though the model loaded successfully. I'm doing everything in Google Colab.

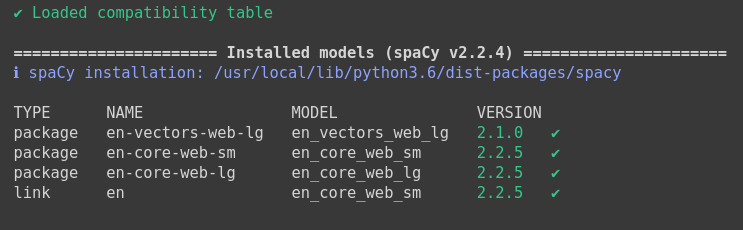

After running spacy validate, I got this:

My workflow is as follows:

base model: en_core_web_lg

vectors: en_vectors_web_lg

pretrained: the same model you used for the food ingredients example

Thanks

How are you loading the model? The error message mirrors exactly what's passed into the call to spacy.load(), so it sounds like somewhere in your code, you're calling spacy.load("nlp")?
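For reference, the load_model() frames in the traceback earlier in this thread show the order in which spaCy v2 resolves the string it is given: shortcut link, installed package, path to a data directory, and otherwise E050 with the same string echoed back. Below is a simplified sketch of that order with hypothetical names (resolve_model_name, shortcut_dir); spaCy's own package check differs in detail.

```python
import importlib.util
from pathlib import Path


def _is_importable(name):
    """True if `name` can be found as an importable module or package."""
    try:
        return importlib.util.find_spec(name) is not None
    except (ImportError, ValueError):
        # e.g. "en_core_web_lg.vectors" when the parent package isn't installed
        return False


def resolve_model_name(name, shortcut_dir=None):
    """Sketch of spaCy v2's load_model() lookup order for a string name.

    shortcut_dir is a hypothetical stand-in for spaCy's directory of
    shortcut links.
    """
    if shortcut_dir is not None and (Path(shortcut_dir) / name).exists():
        return ("link", name)
    if _is_importable(name):
        return ("package", name)
    if Path(name).exists():
        return ("path", name)
    # The string is echoed back as-is, so spacy.load("nlp") reports 'nlp'.
    raise OSError(
        f"[E050] Can't find model '{name}'. It doesn't seem to be a shortcut "
        "link, a Python package or a valid path to a data directory."
    )
```

In other words, spacy.load("nlp") looks for something literally named nlp; it cannot see a Python variable of that name.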

I am calling spacy.load("en_vectors_web_lg"). It works in Google Colab, but when I run the same code in my local terminal or Jupyter notebook, it throws the following error:

I have also run spacy validate:

Please help

Thanks
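When the same call works in Colab but not locally, it may help to check which Python interpreter is actually running and whether the model packages are importable from it, since a terminal and a local Jupyter kernel are not necessarily the same environment. A minimal stdlib sketch; report_model_availability is a hypothetical helper, and it complements spacy validate rather than replacing it.

```python
import importlib.util
import sys


def report_model_availability(names):
    """Print the running interpreter and whether each name is importable."""
    print("Python executable:", sys.executable)
    found = {}
    for name in names:
        try:
            found[name] = importlib.util.find_spec(name) is not None
        except (ImportError, ValueError):
            found[name] = False
        print(f"{name}: {'installed' if found[name] else 'not found'}")
    return found


if __name__ == "__main__":
    # Run this in Colab, in the terminal and in the local notebook, then compare.
    report_model_availability(["spacy", "en_core_web_lg", "en_vectors_web_lg"])
```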

Hi @salhasalman.

This thread is already a bit old and possibly outdated.

If you're running into spacy-specific issues with loading models, we'll be able to help you better if you open a discussion thread at Discussions · explosion/spaCy · GitHub, and provide a minimal code snippet that reproduces the error and/or paste the full error stack trace.