Say I have a set of 10 images to be annotated.

During annotation, can a user jump to, say, image_id 7?

This would be helpful if we are annotating a PDF and we want to jump ahead to a specific section.

Welcome to the forum @tue.truong10!

Prodigy has been designed to stream tasks in a generator-like fashion, mostly to avoid having to load entire datasets into memory. This makes the annotation experience snappy, but it also means that it's not straightforward to go back and forth in the input stream.
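To illustrate the trade-off, here's a minimal sketch in plain Python (not Prodigy's actual loader code): a generator produces tasks one at a time, so nothing is held in memory up front, but there's no way to index into it. Reaching the seventh image means consuming the six before it. The `image_id` field and the file names are made up for illustration.

```python
from itertools import islice
from typing import Dict, Iterator


def image_stream(n_images: int) -> Iterator[Dict]:
    """Yield one task per image lazily (hypothetical task layout)."""
    for image_id in range(1, n_images + 1):
        # In a real loader this is where the image would be read from disk,
        # so only the task currently being produced needs to be in memory.
        yield {"image_id": image_id, "image": f"page_{image_id}.png"}


stream = image_stream(10)
# A generator can't be indexed (stream[6] raises TypeError), so "jumping"
# to image 7 really means consuming and discarding images 1-6 first.
seventh = next(islice(stream, 6, None))
print(seventh["image_id"])  # 7
# Those six skipped tasks are gone: the generator can only move forward.
print(next(stream)["image_id"])  # 8
```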

How do annotators know they want to go to this particular example? Do they know the example's ID or something similar, or do they need to skim through the examples in between? If they need to skim, perhaps quickly hitting the ignore button for the examples they don't want to annotate would be sufficient?

Other than that, if the annotator has a way to identify the example they want to jump to, you could potentially implement a custom event that resets the stream so that the first example is the one the annotator wants to jump to. However, this would change the stream for all annotators, so it wouldn't be applicable to multi-annotator scenarios.
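The stream-reset idea could look roughly like this, as a sketch under a few assumptions: the source can be re-read from the start (e.g. a list, or a file you re-open), every task carries an `image_id`, and `stream_from` is a hypothetical helper rather than a Prodigy function. Wiring it up to a custom event in the UI is left out, since that depends on the recipe. Tasks before the target are moved to the end rather than dropped, so nothing is lost.

```python
from typing import Dict, Iterable, Iterator, List


def stream_from(tasks: Iterable[Dict], target_id: int) -> Iterator[Dict]:
    """Yield tasks starting at target_id, then wrap around to the earlier ones.

    Hypothetical helper: assumes each task has an "image_id" key and that
    `tasks` can be re-read from the beginning whenever the stream is reset.
    """
    skipped: List[Dict] = []
    found = False
    for task in tasks:
        if not found and task["image_id"] != target_id:
            # Hold on to earlier pages so they can still be annotated later.
            skipped.append(task)
            continue
        found = True
        yield task
    # Serve the pages before the target last (all of them, if target_id
    # didn't match any task).
    yield from skipped


tasks = [{"image_id": i, "image": f"page_{i}.png"} for i in range(1, 11)]
order = [t["image_id"] for t in stream_from(tasks, 7)]
print(order)  # [7, 8, 9, 10, 1, 2, 3, 4, 5, 6]
```

Note that buffering the pages before the target means memory grows with the jump distance, which is usually fine for a single PDF but works against the lazy streaming described above for very large sources.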

Hitting ignore is precisely what we're doing right now, but it's tedious, and we often need to go back and forth between pages to get context.

A PDF is handled as a set of images, but annotating it is different.

The scenario is that most of the time the content we're looking for is in the middle of the PDF, but not always, so the ability to jump to a specific image is important.

I've had a chance to play with other tools like Label Studio and CVAT, which have this feature in their UI:

- Multipage Document: the ability to group a set of images into a single annotation document

- Pagination buttons to scroll and jump

- The ability to submit the annotations of those PDF images as one single task.

Hopefully Prodigy can improve its UI capabilities. It beats the other products because it's lightweight, fast, and has pipelining.

Thanks for the feedback @tue.truong10!

I was experimenting a bit with Prodigy's custom interfaces to see whether the requirement you described can be implemented, and I must say I got really close.

It's really easy to re-format the task to have a carousel of PDF pages as an HTML block at the bottom and select the page for annotation by clicking on one of the thumbnails.

The part that currently doesn't have an easy solution (because it lives in front-end code that end users can't modify) is how to programmatically update the image annotation spans.

The app currently isn't prepared to modify spans from JavaScript, but we're already discussing internally how to extend customization in this direction, and we'll definitely post an update when such options become available.

Just jumping in to say that discussing this with Magda internally has led to a new interface idea that I really want to try out for the core library. I think this would solve your use case and also be generalisable for different tasks and data types, which is something that's always important for new features.


The interface could be a tabbed/carousel-style view (with keyboard shortcuts for tabbing) for documents, or any other logical collection of content, with a format basically looking like this:

```
{
  "pages": [task, task]
}
```

...where `task` is the respective JSON format supported by Prodigy for the different interfaces. So in this case, it could be the image and optional spans, but it could also be text, etc. The annotations for each page or block, whatever we want to call it, would then be stored in place. There would have to be some back-end logic that splits the pages into individual tasks again, so you can train from it out of the box, but that feels relatively straightforward.
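For anyone who wants to experiment with the shape of the data, here's a minimal sketch of both directions: grouping per-page image tasks into one `pages` task per document, and the back-end step that splits an annotated document back into individual tasks for training. Only the `pages` key comes from the proposal above; the `doc_id` and `page` fields and both helper names are hypothetical, and this isn't meant to reflect how Prodigy implements it internally.

```python
from collections import defaultdict
from typing import Dict, List


def group_into_documents(page_tasks: List[Dict]) -> List[Dict]:
    """Bundle per-page tasks into one {"pages": [...]} task per document.

    Assumes (hypothetically) that each page task has "doc_id" and "page" keys.
    """
    by_doc: Dict[str, List[Dict]] = defaultdict(list)
    for task in page_tasks:
        by_doc[task["doc_id"]].append(task)
    documents = []
    for doc_id, pages in by_doc.items():
        # Keep pages in reading order so the tabs/carousel match the PDF.
        pages.sort(key=lambda t: t["page"])
        documents.append({"doc_id": doc_id, "pages": pages})
    return documents


def split_into_tasks(document: Dict) -> List[Dict]:
    """Split an annotated document back into one task per page.

    Annotations such as "spans" are stored in place on each page, so each
    page dict is already a self-contained task; we shallow-copy it and tag
    it with the parent document's ID if it doesn't have one yet.
    """
    tasks = []
    for page in document["pages"]:
        task = dict(page)
        task.setdefault("doc_id", document.get("doc_id"))
        tasks.append(task)
    return tasks


pages = [
    {"doc_id": "report.pdf", "page": 2, "image": "report_2.png"},
    {"doc_id": "report.pdf", "page": 1, "image": "report_1.png", "spans": []},
]
doc = group_into_documents(pages)[0]
print([p["page"] for p in doc["pages"]])  # [1, 2]
print(len(split_into_tasks(doc)))  # 2
```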

What I like about the idea is that it preserves the simplicity and efficiency of the card-based interfaces, which is kinda important to the philosophy of Prodigy. Anyway, feel free to share your thoughts and whether this would be a good solution for your use case. If it does actually work out like I imagine it in my head, I'm also happy to share it for early beta testing.

@ines We would love to try it out. At the very least, we can give you some feedback.

Thank you!

Great, thanks! I'm still working on the UI interactions and the best possible usage pattern, and will keep you updated when we have an alpha version to test (which you could then install by setting the `--pre` option).

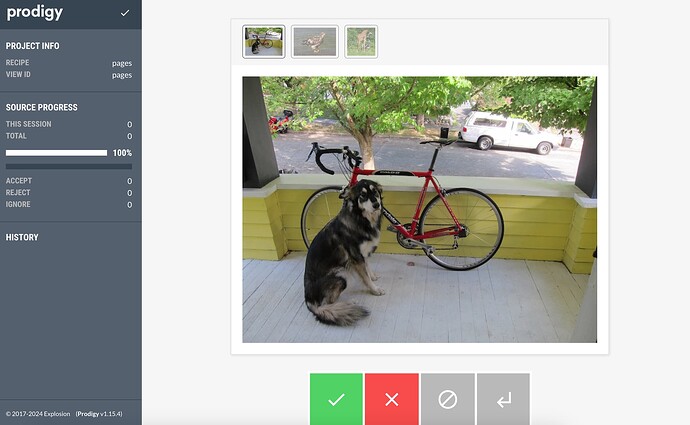

But here's a preview of how it could look:

Hey,

We're happy to announce that the pages UI @ines previewed in this thread is now available in Prodigy 1.17.0!

I recommend checking our docs to see what it's capable of and how to use it.

And, again, thanks for posting such an inspiring usage question @tue.truong10!